The Economist, volume 9200, 2021

The Economist, volume 9244, 2021

Human Image Recognition

Background

My approach to AI started in 2016 when I made my first robotic performance: a robot filmed several people on a green background and then sent their video on a YouTube channel titled People At An Exhibition (link). Later I worked on GANs and image generation from famous datasets such as Caltech 256 (2017).Having understood the blindness of machine learning processes, I decided to stop in the construction of new images and to deepen the relationship between programmers and the algorithms they created.Shortly before this pandemic I had managed to establish a dialogue with some scientists from MIT in Boston. Discussing with them gave me a number of insights into Artificial Intelligence, not as an abstract entity abstruse from man, but as an organic system of hybrid human-synthetic operations. Among the peculiarities that emerged, one in particular caught my attention. Most AIs base their high rates of accuracy and effectiveness on a small number of datasets. This makes these benchmarks biased. On the one hand, no scientist wants to be confronted with different datasets that could sink the benchmarks; on the other hand, as spectators, we are confronted with results that are exceptional (see the evolution of StyleGAN or TecoGAN) but fragile in their partiality.

This is a bias inherent in the AI epic, which I believe should also be taken into account in the field of art. Having abandoned the recent summer of AI, I have decided to confront this critical issue, trying on the one hand to reflect on the concept of the anatomy of neural networks and their tangibility and preservation. On the other hand, and this is the case with this project, I tried to think like a machine, reflecting on the concepts of memory and identity.

Project description

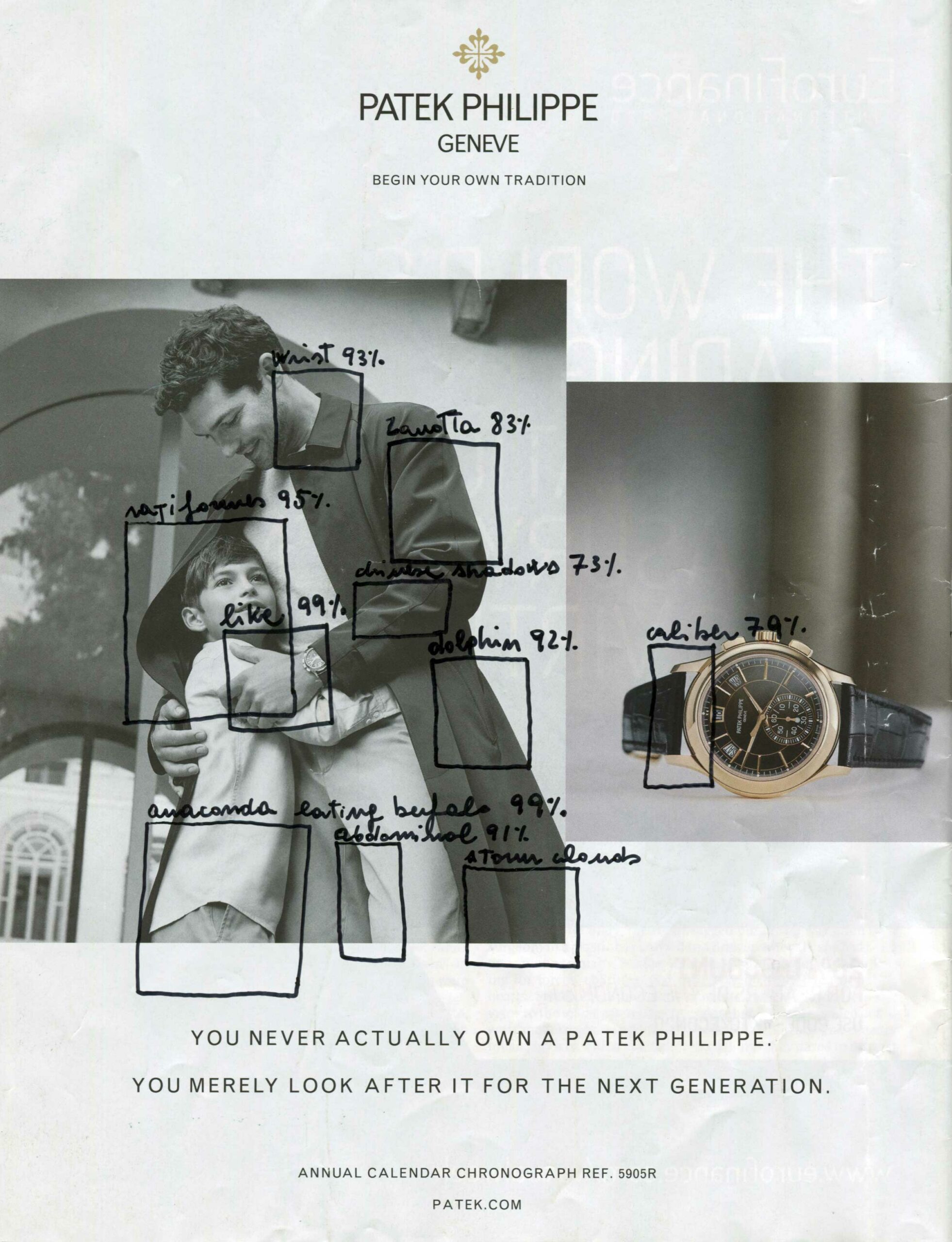

In this work I observe with an autistic and specialized way (exactly like a machine) different portions of the same image and I try to hypothesize, for each of them, what it is about. I also try to give a value to the degree of confidence with which I propose my description. I try to look as a machine would look, extremely careful and precise but completely unable to understand the value of the elements it identifies, their relationship and the context that hosts them, may have.

The result is a series of readings that tell about me, about my visual memory. It is like reconstructing a visual cultural dataset on which I have drawn and with which I have structured my gaze.

This operation will be carried out on the back cover of a year’s worth of magazines from The Economist to which I subscribed in 2019 in anticipation of the first lockdown in a desperate attempt to stay hooked to a series of global economic and cultural reasoning that I was afraid of losing with the gradual closures. The magazine subscription started exactly with the lockdown in 2019 and will expire shortly in the coming weeks.

The choice falls on these images because I am interested in linking my planning to a precise historical moment and I find in magazines and newspapers, purely analog, an interesting support. In this case, the fact that it is a precise year of the magazine, which also corresponds to the only year in which I subscribed, contributes to and consolidates the idea of a corpus. I also like the randomness of the images on which I work. They are, in fact, images accompanying a textual apparatus that I did not look for but to which I subscribed even though I was not directly interested in them. So I find them completely neutral, I consider them vanilla-images.

The result is the 52 wall frames containing the modified fourth covers.

Leroy Merlin, Human Image Recognition, #001, 60 x 90, 2021

In this iteration of the work, for example, I took in analysis a printed poster sold by Leroy Merlin. And down here an image sold by IKEA. The purpose is to expand the “field of work” to those images that are considered to be culturally overwhelming due to their massive presence in the world. The attempt I am doing at humanising the way algorithms look at things has to be done on images where for me to forget the context is incredibly hard.

I realized the in the way algorithms look at things, you always have a complete understanding of the whole process but you could only have a grasp on the departing dataset: that is the only discrete and tangible thing. The rest is unknown: the reasoning and the resulting “recognition”. In my case it is exactly the opposite: the final image is my station of departure whereas the dataset is the only thing I do not know yet. Through those squares, I am letting it arise from my head.